Compare commits

No common commits. "archive/ref-data" and "main" have entirely different histories.

archive/re ... main

3
.gitattributes
Vendored
Regular file
@ -0,0 +1,3 @@
# correct the language detection on github
# exclude data files from linguist analysis
notebooks/* linguist-generated

11
.github/dependabot.yml
Vendored
Regular file
@ -0,0 +1,11 @@
# To get started with Dependabot version updates, you'll need to specify which
# package ecosystems to update and where the package manifests are located.
# Please see the documentation for all configuration options:
# https://docs.github.com/code-security/dependabot/dependabot-version-updates/configuration-options-for-the-dependabot.yml-file

version: 2
updates:
  - package-ecosystem: "pip"
    directory: "/" # Location of package manifests
    schedule:
      interval: "weekly"

43
.github/workflows/ci.yml
Vendored
Regular file
@ -0,0 +1,43 @@
name: CI


on:
  push:
    branches: [ main ]
  pull_request:
    branches: [ main ]
  workflow_dispatch:


jobs:
  test:
    runs-on: ${{ matrix.os }}
    strategy:
      fail-fast: false
      matrix:
        os: [ubuntu-latest, windows-latest, macos-latest]
        python-version: ['3.10', '3.11']
    steps:
      - name: Checkout repository
        uses: actions/checkout@v4
      - name: Set up Python ${{ matrix.python-version }}
        uses: actions/setup-python@v5
        with:
          python-version: ${{ matrix.python-version }}
      - name: Install dependencies
        run: |
          pip install spacy --no-binary blis # build blis from source instead of using a prebuilt wheel
          pip install -e .[dev]
      - name: Run pytest
        run: |
          cd ammico
          python -m pytest -svv -m "not gcv" --cov=. --cov-report=xml
      - name: Upload coverage
        if: matrix.os == 'ubuntu-latest' && matrix.python-version == '3.11'
        uses: codecov/codecov-action@v3
        env:
          CODECOV_TOKEN: ${{ secrets.CODECOV_TOKEN }}
        with:
          fail_ci_if_error: false
          files: ammico/coverage.xml
          verbose: true
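
The `strategy.matrix` above expands into the cross product of its keys (three operating systems times two Python versions), and `fail-fast: false` lets every combination run to completion even if one fails. The short Python model below is only an illustration, not part of the repository: it copies the matrix values and mirrors the `if:` condition of the coverage step to show that six test jobs run and exactly one of them uploads coverage.

```python
from itertools import product

# Values copied from the `strategy.matrix` section of ci.yml.
OSES = ["ubuntu-latest", "windows-latest", "macos-latest"]
PYTHON_VERSIONS = ["3.10", "3.11"]


def expand_matrix(oses, versions):
    """Return one dict per job: a two-key matrix expands to the cross product of its values."""
    return [{"os": o, "python-version": v} for o, v in product(oses, versions)]


def uploads_coverage(job):
    """Mirror of the `if:` condition on the 'Upload coverage' step."""
    return job["os"] == "ubuntu-latest" and job["python-version"] == "3.11"


if __name__ == "__main__":
    jobs = expand_matrix(OSES, PYTHON_VERSIONS)
    print(len(jobs), "test jobs")
    print("coverage uploaded by:", [job for job in jobs if uploads_coverage(job)])
```

Restricting the upload to one combination presumably avoids sending several overlapping coverage reports for the same commit.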

36
.github/workflows/docs.yml
Vendored
Regular file
@ -0,0 +1,36 @@
name: Pages

on:
  push:
    branches: [ main ]
  workflow_dispatch:

jobs:
  build:
    runs-on: ubuntu-latest
    steps:
      - uses: actions/setup-python@v5
        with:
          python-version: '3.11'
      - uses: actions/checkout@v4
        with:
          fetch-depth: 0 # otherwise, you will fail to push refs to dest repo
      - name: install ammico
        run: |
          python -m pip install uv
          uv pip install --system -e .[dev]
      - name: set google auth
        uses: 'google-github-actions/auth@v0.4.0'
        with:
          credentials_json: '${{ secrets.GOOGLE_APPLICATION_CREDENTIALS }}'
      - name: get pandoc
        run: |
          sudo apt-get install -y pandoc
      - name: Build documentation
        run: |
          cd docs
          make html
      - name: Push changes to gh-pages
        uses: JamesIves/github-pages-deploy-action@v4
        with:
          folder: docs # The folder the action should deploy.

77
.github/workflows/release.yml
Vendored
Regular file
@ -0,0 +1,77 @@
name: release to pypi

on:
  release:
    types: [published]
  workflow_dispatch:

jobs:
  build:
    name: Build distribution
    runs-on: ubuntu-latest

    steps:
      - name: Checkout repository
        uses: actions/checkout@v4
      - name: Set up Python 3.11
        uses: actions/setup-python@v5
        with:
          python-version: "3.11"
      - name: install pypa/build
        run: >-
          python -m
          pip install
          build
          --user
      - name: Build distribution
        run: python -m build
      - name: store the dist packages
        uses: actions/upload-artifact@v4
        with:
          name: python-package-distributions
          path: dist/

  publish-to-pypi:
    name: Publish to PyPI
    if: startsWith(github.ref, 'refs/tags/')
    needs:
      - build
    runs-on: ubuntu-latest
    environment:
      name: pypi
      url: https://pypi.org/p/ammico
    permissions:
      id-token: write
    steps:
      - name: Download all dists
        uses: actions/download-artifact@v4
        with:
          name: python-package-distributions
          path: dist/
      - name: publish dist to pypi
        uses: pypa/gh-action-pypi-publish@release/v1

  publish-to-testpypi:
    name: Publish Python distribution to TestPyPI
    if: startsWith(github.ref, 'refs/tags/')
    needs:
      - build
    runs-on: ubuntu-latest

    environment:
      name: testpypi
      url: https://test.pypi.org/p/ammico
    permissions:
      id-token: write # IMPORTANT: mandatory for trusted publishing

    steps:
      - name: Download all the dists
        uses: actions/download-artifact@v4
        with:
          name: python-package-distributions
          path: dist/
      - name: Publish distribution 📦 to TestPyPI
        uses: pypa/gh-action-pypi-publish@release/v1
        with:
          repository-url: https://test.pypi.org/legacy/

132
.gitignore
Vendored
Regular file
@ -0,0 +1,132 @@
# Byte-compiled / optimized / DLL files
__pycache__/
*.py[cod]
*$py.class

# C extensions
*.so

# Distribution / packaging
.Python
build/
develop-eggs/
dist/
downloads/
eggs/
.eggs/
lib/
lib64/
parts/
sdist/
var/
wheels/
pip-wheel-metadata/
share/python-wheels/
*.egg-info/
.installed.cfg
*.egg
MANIFEST

# PyInstaller
# Usually these files are written by a python script from a template
# before PyInstaller builds the exe, so as to inject date/other infos into it.
*.manifest
*.spec

# Installer logs
pip-log.txt
pip-delete-this-directory.txt

# Unit test / coverage reports
htmlcov/
.tox/
.nox/
.coverage
.coverage.*
.cache
nosetests.xml
coverage.xml
*.cover
*.py,cover
.hypothesis/
.pytest_cache/

# Translations
*.mo
*.pot

# Django stuff:
*.log
local_settings.py
db.sqlite3
db.sqlite3-journal

# Flask stuff:
instance/
.webassets-cache

# Scrapy stuff:
.scrapy

# Sphinx documentation
docs/_build/

# PyBuilder
target/

# Jupyter Notebook
.ipynb_checkpoints

# IPython
profile_default/
ipython_config.py

# pyenv
.python-version

# pipenv
# According to pypa/pipenv#598, it is recommended to include Pipfile.lock in version control.
# However, in case of collaboration, if having platform-specific dependencies or dependencies
# having no cross-platform support, pipenv may install dependencies that don't work, or not
# install all needed dependencies.
#Pipfile.lock

# PEP 582; used by e.g. github.com/David-OConnor/pyflow
__pypackages__/

# Celery stuff
celerybeat-schedule
celerybeat.pid

# SageMath parsed files
*.sage.py

# Environments
.env
.venv
env/
venv/
ENV/
env.bak/
venv.bak/

# Spyder project settings
.spyderproject
.spyproject

# Rope project settings
.ropeproject

# mkdocs documentation
/site

# mypy
.mypy_cache/
.dmypy.json
dmypy.json

# Pyre type checker
.pyre/

# data folder
/data/

0
.gitmodules
Vendored
Regular file

14
.pre-commit-config.yaml
Regular file
@ -0,0 +1,14 @@
repos:
  - repo: https://github.com/kynan/nbstripout
    rev: 0.8.1
    hooks:
      - id: nbstripout
        files: ".ipynb"
  - repo: https://github.com/astral-sh/ruff-pre-commit
    # Ruff version.
    rev: v0.13.3
    hooks:
      # Run the linter.
      - id: ruff-check
      # Run the formatter.
      - id: ruff-format

49
CITATION.cff
Regular file
@ -0,0 +1,49 @@
cff-version: 1.2.0
title: >-
  AMMICO, an AI-based Media and Misinformation Content
  Analysis Tool
message: >-
  If you use this software, please cite it using the
  metadata from this file.
type: software
authors:
  - family-names: "Dumitrescu"
    given-names: "Delia"
    orcid: "https://orcid.org/0000-0002-0065-3875"
  - family-names: "Ulusoy"
    given-names: "Inga"
    orcid: "https://orcid.org/0000-0001-7294-4148"
  - family-names: "Andriushchenko"
    given-names: "Petr"
    orcid: "https://orcid.org/0000-0002-4518-6588"
  - family-names: "Daskalakis"
    given-names: "Gwydion"
    orcid: "https://orcid.org/0000-0002-7557-1364"
  - family-names: "Kempf"
    given-names: "Dominic"
    orcid: "https://orcid.org/0000-0002-6140-2332"
  - family-names: "Ma"
    given-names: "Xianghe"
identifiers:
  - type: doi
    value: 10.5117/CCR2025.1.3.DUMI
repository-code: 'https://github.com/ssciwr/AMMICO'
url: 'https://ssciwr.github.io/AMMICO/build/html/index.html'
abstract: >-
  ammico (AI-based Media and Misinformation Content Analysis
  Tool) is a publicly available software package written in
  Python 3, whose purpose is the simultaneous evaluation of
  the text and graphical content of image files. After
  describing the software features, we provide an assessment
  of its performance using a multi-country, multi-language
  data set containing COVID-19 social media disinformation
  posts. We conclude by highlighting the tool’s advantages
  for communication research.
keywords:
  - nlp
  - translation
  - computer-vision
  - text-extraction
  - classification
  - social media
license: MIT

36
CONTRIBUTING.md
Regular file
@ -0,0 +1,36 @@
# Contributing to ammico

Welcome to `ammico`! Contributions to the package are welcome. Please adhere to the following conventions:

- fork the repository, make your changes, and make sure your changes pass all the tests (Sonarcloud, unit and integration tests, code coverage limits); then open a Pull Request for your changes. Tag one of `ammico`'s developers for review.
- install and use the pre-commit hooks by running `pre-commit install` in the repository directory, so that all your changes are linted and formatted by ruff (PEP8 style, black-compatible formatting)
- make sure to update the documentation if applicable

The tests are located in `ammico/tests`. Unit test modules are named `test` followed by an underscore and the name of the module; inside the unit test modules, each test function is named `test` followed by an underscore and the name of the function/method that is being tested.
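
To illustrate the naming convention, the hypothetical helpers below (not part of ammico) derive the expected test module and test function names; `ammico/colors.py` and `find_files` are taken from this repository.

```python
from pathlib import PurePosixPath


def expected_test_module(module_path: str) -> str:
    """Map a package module to its unit-test module, e.g. ammico/colors.py -> ammico/tests/test_colors.py."""
    module = PurePosixPath(module_path)
    return str(module.parent / "tests" / f"test_{module.name}")


def expected_test_function(function_name: str) -> str:
    """Name of the test for a function or method, e.g. find_files -> test_find_files."""
    return f"test_{function_name}"


print(expected_test_module("ammico/colors.py"))  # ammico/tests/test_colors.py
print(expected_test_function("find_files"))  # test_find_files
```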

To report bugs and issues, please [open an issue](https://github.com/ssciwr/ammico/issues) describing what you did, what you expected to happen, and what actually happened. Please also provide information about your environment and operating system.

For any questions and comments, feel free to post to our [Discussions forum](https://github.com/ssciwr/AMMICO/discussions/151).

**Thank you for contributing to `ammico`!**

## Templates
### Template for pull requests

- issues that are addressed by this PR: [*For example, this closes #33 or this addresses #29*]

- changes that were made: [*For example, updated version of dependencies or added a file type for input reading*]

- if applicable: Follow-up work that is required

### Template for bug report

- what I did:

- what I expected:

- what actually happened:

- Python version and environment:

- Operating system:

23
Dockerfile
Regular file
@ -0,0 +1,23 @@
FROM jupyter/base-notebook

# Install system dependencies for computer vision packages
USER root
RUN apt update && apt install -y build-essential libgl1 libglib2.0-0 libsm6 libxext6 libxrender1 \
    && rm -rf /var/lib/apt/lists/*
USER $NB_USER

# Copy the repository into the container
COPY --chown=${NB_UID} . /opt/ammico

# Install the Python package
RUN python -m pip install /opt/ammico

# Make JupyterLab the default for this application
ENV JUPYTER_ENABLE_LAB=yes

# Export where the data is located
ENV XDG_DATA_HOME=/opt/ammico/data

# Copy notebooks into the home directory
RUN rm -rf "$HOME"/work && \
    cp /opt/ammico/notebooks/*.ipynb "$HOME"

106
FAQ.md
Regular file
@ -0,0 +1,106 @@
# FAQ

## Solving compatibility problems

Some ammico components require `tensorflow` (e.g. the Emotion detector), others `pytorch` (e.g. the Summary detector). These two frameworks are sometimes incompatible with each other. To avoid such problems on your machine, you can prepare a suitable environment before installing the package (conda is required):

### 1. First, install tensorflow (https://www.tensorflow.org/install/pip)

- create a new environment with python and activate it

```conda create -n ammico_env python=3.10```

```conda activate ammico_env```
- install cudatoolkit from conda-forge

```conda install -c conda-forge cudatoolkit=11.8.0```
- install nvidia-cudnn-cu11 from pip

```python -m pip install nvidia-cudnn-cu11==8.6.0.163```
- add a script that runs when the conda environment `ammico_env` is activated, to put the right libraries on your LD_LIBRARY_PATH

```
mkdir -p $CONDA_PREFIX/etc/conda/activate.d
echo 'CUDNN_PATH=$(dirname $(python -c "import nvidia.cudnn;print(nvidia.cudnn.__file__)"))' >> $CONDA_PREFIX/etc/conda/activate.d/env_vars.sh
echo 'export LD_LIBRARY_PATH=$CUDNN_PATH/lib:$CONDA_PREFIX/lib/:$LD_LIBRARY_PATH' >> $CONDA_PREFIX/etc/conda/activate.d/env_vars.sh
source $CONDA_PREFIX/etc/conda/activate.d/env_vars.sh
```
- deactivate and re-activate the conda environment so that the script above runs

```conda deactivate```

```conda activate ammico_env```

- install tensorflow

```python -m pip install tensorflow==2.12.1```

### 2. Second, install pytorch

- install pytorch for the same CUDA version as above

```python -m pip install torch torchvision torchaudio --index-url https://download.pytorch.org/whl/cu118```

### 3. Once the environment is prepared, install the `ammico` package

- ```python -m pip install ammico```

That's it.
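
After these steps, a quick check from Python can confirm that both frameworks are installed in the same environment and whether each can see the GPU. This is a minimal sketch, not part of ammico; it uses only `importlib` plus the public `tf.config.list_physical_devices` and `torch.cuda.is_available` calls.

```python
import importlib
import importlib.util

# GPU probes for the two frameworks named in this FAQ (public tensorflow / torch APIs).
GPU_CHECKS = {
    "tensorflow": lambda tf: bool(tf.config.list_physical_devices("GPU")),
    "torch": lambda torch: bool(torch.cuda.is_available()),
}


def framework_status(names=("tensorflow", "torch")) -> dict:
    """For each package, report whether it is installed and, if a probe exists, whether it sees a GPU."""
    report = {}
    for name in names:
        installed = importlib.util.find_spec(name) is not None
        gpu = None  # None means "not checked": package missing or no GPU probe defined
        if installed and name in GPU_CHECKS:
            gpu = GPU_CHECKS[name](importlib.import_module(name))
        report[name] = {"installed": installed, "gpu": gpu}
    return report


if __name__ == "__main__":
    for package, info in framework_status().items():
        print(f"{package}: installed={info['installed']}, gpu={info['gpu']}")
```

If either framework reports `installed=False`, or `gpu=False` on a machine with a GPU, revisit the corresponding step above before installing ammico.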

|

||||

|

||||

### Micromamba

|

||||

If you are using micromamba you can prepare environment with just one command:

|

||||

|

||||

```micromamba create --no-channel-priority -c nvidia -c pytorch -c conda-forge -n ammico_env "python=3.10" pytorch torchvision torchaudio pytorch-cuda "tensorflow-gpu<=2.12.3" "numpy<=1.23.4"```

|

||||

|

||||

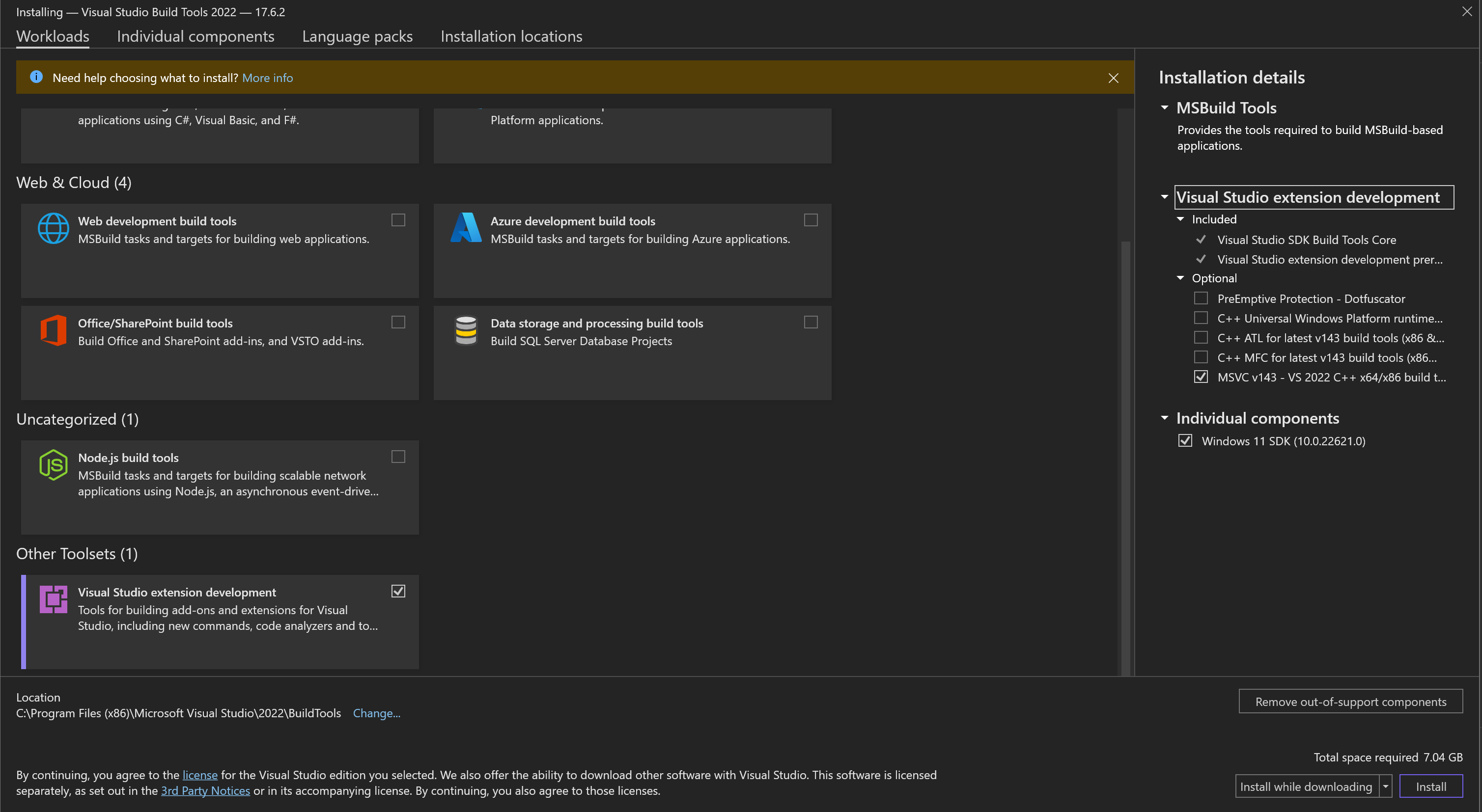

### Windows

|

||||

|

||||

To make pycocotools work on Windows OS you may need to install `vs_BuildTools.exe` from https://visualstudio.microsoft.com/visual-cpp-build-tools/ and choose following elements:

|

||||

- `Visual Studio extension development`

|

||||

- `MSVC v143 - VS 2022 C++ x64/x86 build tools`

|

||||

- `Windows 11 SDK` for Windows 11 (or `Windows 10 SDK` for Windows 10)

|

||||

|

||||

Be careful, it requires around 7 GB of disk space.

|

||||

|

||||

|

||||

|

||||

## What happens to the images that are sent to google Cloud Vision?

|

||||

|

||||

You have to accept the privacy statement of ammico to run this type of analyis.

|

||||

|

||||

According to the [google Vision API](https://cloud.google.com/vision/docs/data-usage), the images that are uploaded and analysed are not stored and not shared with third parties:

|

||||

|

||||

> We won't make the content that you send available to the public. We won't share the content with any third party. The content is only used by Google as necessary to provide the Vision API service. Vision API complies with the Cloud Data Processing Addendum.

|

||||

|

||||

> For online (immediate response) operations (`BatchAnnotateImages` and `BatchAnnotateFiles`), the image data is processed in memory and not persisted to disk.

|

||||

For asynchronous offline batch operations (`AsyncBatchAnnotateImages` and `AsyncBatchAnnotateFiles`), we must store that image for a short period of time in order to perform the analysis and return the results to you. The stored image is typically deleted right after the processing is done, with a failsafe Time to live (TTL) of a few hours.

|

||||

Google also temporarily logs some metadata about your Vision API requests (such as the time the request was received and the size of the request) to improve our service and combat abuse.

|

||||

|

||||

## What happens to the text that is sent to google Translate?

|

||||

|

||||

You have to accept the privacy statement of ammico to run this type of analyis.

|

||||

|

||||

According to [google Translate](https://cloud.google.com/translate/data-usage), the data is not stored after processing and not made available to third parties:

|

||||

|

||||

> We will not make the content of the text that you send available to the public. We will not share the content with any third party. The content of the text is only used by Google as necessary to provide the Cloud Translation API service. Cloud Translation API complies with the Cloud Data Processing Addendum.

|

||||

|

||||

> When you send text to Cloud Translation API, text is held briefly in-memory in order to perform the translation and return the results to you.

|

||||

|

||||

## What happens if I don't have internet access - can I still use ammico?

|

||||

|

||||

Some features of ammico require internet access; a general answer to this question is not possible, some services require an internet connection, others can be used offline:

|

||||

|

||||

- Text extraction: To extract text from images, and translate the text, the data needs to be processed by google Cloud Vision and google Translate, which run in the cloud. Without internet access, text extraction and translation is not possible.

|

||||

- Image summary and query: After an initial download of the models, the `summary` module does not require an internet connection.

|

||||

- Facial expressions: After an initial download of the models, the `faces` module does not require an internet connection.

|

||||

- Multimodal search: After an initial download of the models, the `multimodal_search` module does not require an internet connection.

|

||||

- Color analysis: The `color` module does not require an internet connection.
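
Because only text extraction and translation need the network, a script can test connectivity up front and skip those steps when offline. The helper below is a hypothetical sketch using only the standard library; it is not an ammico function, and the host name `vision.googleapis.com` is an assumption about the Cloud Vision endpoint.

```python
import socket

# Assumption: host serving Google Cloud Vision requests (not taken from ammico).
VISION_HOST = "vision.googleapis.com"


def has_connection(host: str = VISION_HOST, port: int = 443, timeout: float = 3.0) -> bool:
    """Return True if a TCP connection to host:port can be opened within `timeout` seconds."""
    try:
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:  # covers DNS failures, refused connections and timeouts
        return False


if not has_connection():
    print("Offline: skipping text extraction and translation")
```

The offline-capable modules (`summary`, `faces`, `multimodal_search`, `color`) can still run afterwards, provided their models were downloaded beforehand.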

## Why don't I get probabilistic assessments of age, gender and race when running the Emotion Detector?

Due to well-documented biases in the detection of minorities with computer vision tools, and to the ethical implications of such detection, these parts of the tool are not made available to users by default. To access these capabilities, users must first agree with an ethical disclosure statement that reads:

"DeepFace and RetinaFace provide wrappers to trained models in face recognition and emotion detection. Age, gender and race/ethnicity models were trained on the backbone of VGG-Face with transfer learning.

ETHICAL DISCLOSURE STATEMENT:

The Emotion Detector uses DeepFace and RetinaFace to probabilistically assess the gender, age and race of the detected faces. Such assessments may not reflect how the individuals identify. Additionally, the classification is carried out in simplistic categories and contains only the most basic classes (for example, “male” and “female” for gender, and seven non-overlapping categories for ethnicity). To access these probabilistic assessments, you must therefore agree with the following statement: “I understand the ethical and privacy implications such assessments have for the interpretation of the results and that this analysis may result in personal and possibly sensitive data, and I wish to proceed.”

This disclosure statement is included as a separate line of code early in the flow of the Emotion Detector. Once the user has agreed with the statement, further data analyses will also include these assessments.
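
Conceptually, this gating acts like a filter applied to every face result. The sketch below is hypothetical: the key names and the boolean flag are assumptions for illustration, not the API of ammico's `EmotionDetector` or `ethical_disclosure`.

```python
# Hypothetical result keys; ammico's actual output keys may be named differently.
SENSITIVE_KEYS = {"age", "gender", "race"}


def filter_face_result(result: dict, disclosure_accepted: bool) -> dict:
    """Return a copy of one face result, without age/gender/race unless consent was given."""
    if disclosure_accepted:
        return dict(result)
    return {key: value for key, value in result.items() if key not in SENSITIVE_KEYS}


face = {"face": "Yes", "emotion": "happy", "age": 31, "gender": "<category>", "race": "<category>"}
print(filter_face_result(face, disclosure_accepted=False))  # {'face': 'Yes', 'emotion': 'happy'}
```

Asking for consent once, early in the flow, and then passing the decision along keeps the later analysis code free of repeated prompts.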

21
LICENSE
Regular file
@ -0,0 +1,21 @@
MIT License

Copyright (c) 2022 SSC

Permission is hereby granted, free of charge, to any person obtaining a copy
of this software and associated documentation files (the "Software"), to deal
in the Software without restriction, including without limitation the rights
to use, copy, modify, merge, publish, distribute, sublicense, and/or sell
copies of the Software, and to permit persons to whom the Software is
furnished to do so, subject to the following conditions:

The above copyright notice and this permission notice shall be included in all
copies or substantial portions of the Software.

THE SOFTWARE IS PROVIDED "AS IS", WITHOUT WARRANTY OF ANY KIND, EXPRESS OR
IMPLIED, INCLUDING BUT NOT LIMITED TO THE WARRANTIES OF MERCHANTABILITY,
FITNESS FOR A PARTICULAR PURPOSE AND NONINFRINGEMENT. IN NO EVENT SHALL THE
AUTHORS OR COPYRIGHT HOLDERS BE LIABLE FOR ANY CLAIM, DAMAGES OR OTHER
LIABILITY, WHETHER IN AN ACTION OF CONTRACT, TORT OR OTHERWISE, ARISING FROM,
OUT OF OR IN CONNECTION WITH THE SOFTWARE OR THE USE OR OTHER DEALINGS IN THE
SOFTWARE.

84
README.md
Regular file
@ -0,0 +1,84 @@
# AMMICO - AI-based Media and Misinformation Content Analysis Tool

[Open In Colab](https://colab.research.google.com/github/ssciwr/ammico/blob/main/ammico/notebooks/DemoNotebook_ammico.ipynb)

This package extracts data from images such as social media posts that contain an image part and a text part. The analysis can generate a very large number of features, depending on the user input. See [our paper](https://dx.doi.org/10.31235/osf.io/v8txj) for a more in-depth description.

**_This project is currently under development!_**

Use pre-processed image files, such as social media posts with comments, and process them to collect the following information:

1. Text extraction from the images
    1. Language detection
    1. Translation into English or other languages
    1. Cleaning of the text, spell-check
    1. Sentiment analysis
    1. Named entity recognition
    1. Topic analysis
1. Content extraction from the images
    1. Textual summary of the image content ("image caption") that can be analyzed further using the above tools
    1. Feature extraction from the images: the user inputs a query and images are matched to it (both text and image queries)
    1. Question answering
1. Performing person and face recognition in images
    1. Face mask detection
    1. Probabilistic detection of age, gender and race
    1. Emotion recognition
1. Color analysis
    1. Analyse hue and percentage of color on image
1. Multimodal analysis
    1. Find best matches for image content or image similarity
1. Cropping images to remove comments from posts

## Installation

The `AMMICO` package can be installed using pip:
```
pip install ammico
```
This installs the package and its dependencies locally. If you get errors when running some modules after installation, please follow the instructions in the [FAQ](https://ssciwr.github.io/AMMICO/build/html/faq_link.html).

## Usage

The main demonstration notebook can be found in the `notebooks` folder and also on Google Colab: [Open In Colab](https://colab.research.google.com/github/ssciwr/ammico/blob/main/ammico/notebooks/DemoNotebook_ammico.ipynb)

There are further sample notebooks in the `notebooks` folder for the more experimental features:
1. Topic analysis: Use the notebook `get-text-from-image.ipynb` to analyse the topics of the extracted text.\
    **You can run this notebook on Google Colab: [Open In Colab](https://colab.research.google.com/github/ssciwr/ammico/blob/main/ammico/notebooks/get-text-from-image.ipynb)**
    Place the data files and the Google Cloud Vision API key in your Google Drive to access the data.
1. To crop social media posts, use the `cropposts.ipynb` notebook.
    **You can run this notebook on Google Colab: [Open In Colab](https://colab.research.google.com/github/ssciwr/ammico/blob/main/ammico/notebooks/cropposts.ipynb)**

## Features
### Text extraction
The text is extracted from the images using [google-cloud-vision](https://cloud.google.com/vision). For this, you need an API key. Set up your Google account following the instructions on the Google Vision AI website or as described [here](https://ssciwr.github.io/AMMICO/build/html/create_API_key_link.html).
You then need to export the location of the API key as an environment variable:
```
export GOOGLE_APPLICATION_CREDENTIALS="location of your .json"
```
The extracted text is then stored under the `text` key (column when exporting a csv).
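
Before running text extraction, it can help to fail early if the variable is missing or points to the wrong file. A minimal sketch using only the standard library; the function name is hypothetical and not part of ammico (the Google client libraries read the same variable on their own).

```python
import json
import os
from pathlib import Path


def check_google_credentials(env_var: str = "GOOGLE_APPLICATION_CREDENTIALS") -> Path:
    """Return the key-file path stored in `env_var`, failing early with a clear message."""
    location = os.environ.get(env_var)
    if not location:
        raise RuntimeError(f"{env_var} is not set; export the location of your .json key first")
    path = Path(location).expanduser()
    if not path.is_file():
        raise FileNotFoundError(f"{env_var} points to {path}, which is not a file")
    with path.open(encoding="utf-8") as handle:
        json.load(handle)  # raises json.JSONDecodeError if the file is not valid JSON
    return path
```

Calling `check_google_credentials()` at the top of a notebook surfaces a misconfigured key before any images are processed.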

[Googletrans](https://py-googletrans.readthedocs.io/en/latest/) is used to recognize the language automatically and translate the text into English. The text language and the translated text are then stored under the `text_language` and `text_english` keys (columns when exporting a csv).

If you want to analyse the text further, set the `analyse_text` keyword to `True`. The text is then processed using [spacy](https://spacy.io/) (tokenization, part-of-speech tagging, lemmatization, ...). Numbers and unrecognized words are removed from the English text (`text_clean`), its spelling is corrected (`text_english_correct`), and sentiment and subjectivity analyses are carried out (`polarity`, `subjectivity`). The latter two steps use [TextBlob](https://textblob.readthedocs.io/en/dev/index.html). For more information on sentiment analysis with TextBlob, see [here](https://towardsdatascience.com/my-absolute-go-to-for-sentiment-analysis-textblob-3ac3a11d524).

The [Hugging Face transformers library](https://huggingface.co/) is used, via the `transformers` pipeline, to perform a second sentiment analysis, a text summary, and named entity recognition.

### Content extraction

The image content ("caption") is extracted using the [LAVIS](https://github.com/salesforce/LAVIS) library. This library enables vision intelligence extraction using several state-of-the-art models, such as BLIP and BLIP2, depending on the task and user selection. It also supports feature extraction from the images: users can input textual and image queries, and the images in the database are matched to that query (multimodal search). Another option is question answering, where the user inputs a text question and the library finds the images that match the query.

### Emotion recognition

Emotion recognition is carried out using the [deepface](https://github.com/serengil/deepface) and [retinaface](https://github.com/serengil/retinaface) libraries. These libraries detect the presence of faces and provide a probabilistic assessment of their age, gender, race, and emotion based on several state-of-the-art models. They also detect whether the person is wearing a face mask; if so, no further detection is carried out, as the mask reduces the accuracy of the assessment. Because the detection of gender, race and age is carried out in simplistic categories (e.g., for gender, using only "male" and "female"), and because of the ethical implications of such assessments, users can only access this part of the tool if they agree with an ethical disclosure statement (see FAQ). Moreover, once users accept the disclosure, they can set their own detection confidence thresholds.

### Color/hue detection

Color detection is carried out using [colorgram.py](https://github.com/obskyr/colorgram.py) and [colour](https://github.com/vaab/colour) for the distance metric. The colors can be classified into the main named colors/hues in the English language, namely red, green, blue, yellow, cyan, orange, purple, pink, brown, grey, white and black.
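
To make the distance metric concrete: the default `delta_e_method` of `ColorDetector` is "CIE 1976", which is the Euclidean distance between two colors in CIELAB space. The sketch below is a standalone illustration, not ammico's implementation (which relies on the `colour` package and its own `get_color_table`). The reference RGB values for the twelve hue names are an assumption borrowed from the CSS named colors.

```python
import math

# Assumption: reference sRGB values for the twelve hue names, taken from the CSS
# named colors. ammico builds its own table via `get_color_table`, which may differ.
REFERENCE_COLORS = {
    "red": (255, 0, 0), "green": (0, 128, 0), "blue": (0, 0, 255),
    "yellow": (255, 255, 0), "cyan": (0, 255, 255), "orange": (255, 165, 0),
    "purple": (128, 0, 128), "pink": (255, 192, 203), "brown": (165, 42, 42),
    "grey": (128, 128, 128), "white": (255, 255, 255), "black": (0, 0, 0),
}


def _linearize(channel: int) -> float:
    """Undo the sRGB gamma curve for one 8-bit channel."""
    c = channel / 255.0
    return c / 12.92 if c <= 0.04045 else ((c + 0.055) / 1.055) ** 2.4


def _f(t: float) -> float:
    """CIELAB companding function."""
    delta = 6 / 29
    return t ** (1 / 3) if t > delta**3 else t / (3 * delta**2) + 4 / 29


def srgb_to_lab(rgb):
    """Convert an 8-bit sRGB triple to CIELAB (D65 reference white)."""
    r, g, b = (_linearize(c) for c in rgb)
    # Linear sRGB -> CIE XYZ, each component normalised by the D65 white point.
    x = (0.4124 * r + 0.3576 * g + 0.1805 * b) / 0.95047
    y = 0.2126 * r + 0.7152 * g + 0.0722 * b
    z = (0.0193 * r + 0.1192 * g + 0.9505 * b) / 1.08883
    fx, fy, fz = _f(x), _f(y), _f(z)
    return (116 * fy - 16, 500 * (fx - fy), 200 * (fy - fz))


def delta_e_cie1976(lab1, lab2) -> float:
    """CIE 1976 color difference: Euclidean distance in CIELAB."""
    return math.dist(lab1, lab2)


def closest_color_name(rgb) -> str:
    """Name of the reference hue with the smallest CIE 1976 delta E to `rgb`."""
    lab = srgb_to_lab(rgb)
    return min(
        REFERENCE_COLORS,
        key=lambda name: delta_e_cie1976(lab, srgb_to_lab(REFERENCE_COLORS[name])),
    )


print(closest_color_name((250, 5, 5)))  # -> red
```

Other `delta_e_method` options such as "CIE 2000" weight lightness, chroma and hue differences non-uniformly, so they can assign a different name to borderline colors.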

### Cropping of posts

Social media posts can be cropped automatically to remove further comments on the page and restrict the textual content to the first comment only.

23
ammico/__init__.py
Regular file
@ -0,0 +1,23 @@
from ammico.display import AnalysisExplorer
from ammico.faces import EmotionDetector, ethical_disclosure
from ammico.text import TextDetector, TextAnalyzer, privacy_disclosure
from ammico.utils import find_files, get_dataframe

# Export the version defined in project metadata
try:
    from importlib.metadata import version

    __version__ = version("ammico")
except ImportError:
    __version__ = "unknown"

__all__ = [
    "AnalysisExplorer",
    "EmotionDetector",
    "TextDetector",
    "TextAnalyzer",
    "find_files",
    "get_dataframe",
    "ethical_disclosure",
    "privacy_disclosure",
]

145

ammico/colors.py

Regular file

@ -0,0 +1,145 @@

import numpy as np
import webcolors
import colorgram
import colour
from ammico.utils import get_color_table, AnalysisMethod

COLOR_SCHEMES = [
    "CIE 1976",
    "CIE 1994",
    "CIE 2000",
    "CMC",
    "ITP",
    "CAM02-LCD",
    "CAM02-SCD",
    "CAM02-UCS",
    "CAM16-LCD",
    "CAM16-SCD",
    "CAM16-UCS",
    "DIN99",
]


class ColorDetector(AnalysisMethod):
    def __init__(
        self,
        subdict: dict,
        delta_e_method: str = "CIE 1976",
    ) -> None:
        """Color Analysis class, analyse hue and identify named colors.

        Args:
            subdict (dict): The dictionary containing the image path.
            delta_e_method (str): The calculation method used for assigning the
                closest color name, defaults to "CIE 1976".
                The available options are: 'CIE 1976', 'CIE 1994', 'CIE 2000',
                'CMC', 'ITP', 'CAM02-LCD', 'CAM02-SCD', 'CAM02-UCS', 'CAM16-LCD',
                'CAM16-SCD', 'CAM16-UCS', 'DIN99'
        """
        super().__init__(subdict)
        self.subdict.update(self.set_keys())
        self.merge_color = True
        self.n_colors = 100
        if delta_e_method not in COLOR_SCHEMES:
            raise ValueError(
                "Invalid selection for assigning the color name. Please select one of {}".format(
                    COLOR_SCHEMES
                )
            )
        self.delta_e_method = delta_e_method

    def set_keys(self) -> dict:
        colors = {
            "red": 0,
            "green": 0,
            "blue": 0,
            "yellow": 0,
            "cyan": 0,
            "orange": 0,
            "purple": 0,
            "pink": 0,
            "brown": 0,
            "grey": 0,
            "white": 0,
            "black": 0,
        }
        return colors

    def analyse_image(self):
        """
        Uses the colorgram library to extract the n most common colors from the images.
        One problem is that the most common colors are taken before being categorized,
        so for small values it might occur that the ten most common colors are shades of grey,
        while other colors are present but will be ignored. Because of this n_colors=100 was chosen as default.

        The colors are then matched to the closest color in the CSS3 color list using the delta-e metric.
        Their proportions are then summed per color name in the analysis dictionary.
        The colors can be reduced to a smaller list of colors using the get_color_table function.
        These colors are: "red", "green", "blue", "yellow", "cyan", "orange", "purple", "pink", "brown", "grey", "white", "black".

        Returns:
            dict: Dictionary with color names as keys and the proportion of the image covered by each color as values.
        """
        filename = self.subdict["filename"]

        colors = colorgram.extract(filename, self.n_colors)
        for color in colors:
            rgb_name = self.rgb2name(
                color.rgb,
                merge_color=self.merge_color,
                delta_e_method=self.delta_e_method,
            )
            self.subdict[rgb_name] += color.proportion

        # ensure color rounding
        for key in self.set_keys().keys():
            if self.subdict[key]:
                self.subdict[key] = round(self.subdict[key], 2)

        return self.subdict

    def rgb2name(
        self, c, merge_color: bool = True, delta_e_method: str = "CIE 1976"
    ) -> str:
        """Take an rgb color as input and return the closest color name from the CSS3 color list.

        Args:
            c (Union[List,tuple]): RGB value.
            merge_color (bool, Optional): Whether color name should be reduced, defaults to True.
            delta_e_method (str, Optional): The delta-E method used to find the closest color name, defaults to "CIE 1976".
        Returns:
            str: Closest matching color name.
        """
        if len(c) != 3:
            raise ValueError("Input color must be a list or tuple of length 3 (RGB).")

        h_color = "#{:02x}{:02x}{:02x}".format(int(c[0]), int(c[1]), int(c[2]))
        try:
            output_color = webcolors.hex_to_name(h_color, spec="css3")
            output_color = output_color.lower().replace("grey", "gray")
        except ValueError:
            delta_e_lst = []
            filtered_colors = webcolors._definitions._CSS3_NAMES_TO_HEX

            for _, img_hex in filtered_colors.items():
                cur_clr = webcolors.hex_to_rgb(img_hex)
                # calculate color Delta-E
                delta_e = colour.delta_E(c, cur_clr, method=delta_e_method)
                delta_e_lst.append(delta_e)
            # find lowest delta-e
            min_diff = np.argsort(delta_e_lst)[0]
            output_color = (
                str(list(filtered_colors.items())[min_diff][0])
                .lower()
                .replace("grey", "gray")
            )

        # match color to reduced list:
        if merge_color:
            for reduced_key, reduced_color_sub_list in get_color_table().items():
                if str(output_color).lower() in [
                    str(color_name).lower()
                    for color_name in reduced_color_sub_list["ColorName"]
                ]:
                    output_color = reduced_key.lower()
                    break
        return output_color
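The bookkeeping in `analyse_image` sums the `proportion` of every extracted color under its reduced name and then rounds to two decimals. A stand-alone sketch of that step, with the colorgram output replaced by hypothetical `(name, proportion)` pairs so it runs without an image file; `aggregate_proportions` is an illustrative name, not part of ammico.

```python
from typing import Dict, Iterable, Tuple

REDUCED_COLORS = [
    "red", "green", "blue", "yellow", "cyan", "orange",
    "purple", "pink", "brown", "grey", "white", "black",
]


def aggregate_proportions(named: Iterable[Tuple[str, float]]) -> Dict[str, float]:
    """Sum proportions per reduced color name and round to two decimals.

    `named` stands in for the (reduced name, proportion) pairs that
    ColorDetector derives from colorgram; names must be in REDUCED_COLORS.
    """
    totals = {color: 0.0 for color in REDUCED_COLORS}
    for name, proportion in named:
        totals[name] += proportion
    return {color: round(value, 2) for color, value in totals.items()}


# Hypothetical extraction result: three shades already mapped to reduced names
result = aggregate_proportions([("red", 0.3), ("red", 0.12), ("white", 0.5951)])
print(result["red"], result["white"], result["blue"])  # 0.42 0.6 0.0
```

Summing before rounding, as the original does, avoids accumulating rounding error across many small color clusters.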

24

ammico/data/Color_tables.csv

Regular file

@ -0,0 +1,24 @@

Pink;Pink;purple;purple;red;red;orange;orange;yellow;yellow;green;green;cyan;cyan;blue;blue;brown;brown;white;white;grey;grey;black;black
ColorName;HEX;ColorName;HEX;ColorName;HEX;ColorName;HEX;ColorName;HEX;ColorName;HEX;ColorName;HEX;ColorName;HEX;ColorName;HEX;ColorName;HEX;ColorName;HEX;ColorName;HEX
Pink;#FFC0CB;Lavender;#E6E6FA;LightSalmon;#FFA07A;Orange;#FFA500;Gold;#FFD700;GreenYellow;#ADFF2F;Aqua;#00FFFF;CadetBlue;#5F9EA0;Cornsilk;#FFF8DC;White;#FFFFFF;Gainsboro;#DCDCDC;Black;#000000
LightPink;#FFB6C1;Thistle;#D8BFD8;Salmon;#FA8072;DarkOrange;#FF8C00;Yellow;#FFFF00;Chartreuse;#7FFF00;Cyan;#00FFFF;SteelBlue;#4682B4;BlanchedAlmond;#FFEBCD;Snow;#FFFAFA;LightGray;#D3D3D3;;
HotPink;#FF69B4;Plum;#DDA0DD;DarkSalmon;#E9967A;Coral;#FF7F50;LightYellow;#FFFFE0;LawnGreen;#7CFC00;LightCyan;#E0FFFF;LightSteelBlue;#B0C4DE;Bisque;#FFE4C4;HoneyDew;#F0FFF0;Silver;#C0C0C0;;
DeepPink;#FF1493;Orchid;#DA70D6;LightCoral;#F08080;Tomato;#FF6347;LemonChiffon;#FFFACD;Lime;#00FF00;PaleTurquoise;#AFEEEE;LightBlue;#ADD8E6;NavajoWhite;#FFDEAD;MintCream;#F5FFFA;DarkGray;#A9A9A9;;
PaleVioletRed;#DB7093;Violet;#EE82EE;IndianRed;#CD5C5C;OrangeRed;#FF4500;LightGoldenRodYellow;#FAFAD2;LimeGreen;#32CD32;Aquamarine;#7FFFD4;PowderBlue;#B0E0E6;Wheat;#F5DEB3;Azure;#F0FFFF;DimGray;#696969;;
MediumVioletRed;#C71585;Fuchsia;#FF00FF;Crimson;#DC143C;;;PapayaWhip;#FFEFD5;PaleGreen;#98FB98;Turquoise;#40E0D0;LightSkyBlue;#87CEFA;BurlyWood;#DEB887;AliceBlue;#F0F8FF;Gray;#808080;;
;;Magenta;#FF00FF;Red;#FF0000;;;Moccasin;#FFE4B5;LightGreen;#90EE90;MediumTurquoise;#48D1CC;SkyBlue;#87CEEB;Tan;#D2B48C;GhostWhite;#F8F8FF;LightSlateGray;#778899;;
;;MediumOrchid;#BA55D3;FireBrick;#B22222;;;PeachPuff;#FFDAB9;MediumSpringGreen;#00FA9A;DarkTurquoise;#00CED1;CornflowerBlue;#6495ED;RosyBrown;#BC8F8F;WhiteSmoke;#F5F5F5;SlateGray;#708090;;
;;DarkOrchid;#9932CC;DarkRed;#8B0000;;;PaleGoldenRod;#EEE8AA;SpringGreen;#00FF7F;;;DeepSkyBlue;#00BFFF;SandyBrown;#F4A460;SeaShell;#FFF5EE;DarkSlateGray;#2F4F4F;;
;;DarkViolet;#9400D3;;;;;Khaki;#F0E68C;MediumSeaGreen;#3CB371;;;DodgerBlue;#1E90FF;GoldenRod;#DAA520;Beige;#F5F5DC;;;;
;;BlueViolet;#8A2BE2;;;;;DarkKhaki;#BDB76B;SeaGreen;#2E8B57;;;RoyalBlue;#4169E1;DarkGoldenRod;#B8860B;OldLace;#FDF5E6;;;;
;;DarkMagenta;#8B008B;;;;;;;ForestGreen;#228B22;;;Blue;#0000FF;Peru;#CD853F;FloralWhite;#FFFAF0;;;;
;;Purple;#800080;;;;;;;Green;#008000;;;MediumBlue;#0000CD;Chocolate;#D2691E;Ivory;#FFFFF0;;;;
;;MediumPurple;#9370DB;;;;;;;DarkGreen;#006400;;;DarkBlue;#00008B;Olive;#808000;AntiqueWhite;#FAEBD7;;;;
;;MediumSlateBlue;#7B68EE;;;;;;;YellowGreen;#9ACD32;;;Navy;#000080;SaddleBrown;#8B4513;Linen;#FAF0E6;;;;
;;SlateBlue;#6A5ACD;;;;;;;OliveDrab;#6B8E23;;;MidnightBlue;#191970;Sienna;#A0522D;LavenderBlush;#FFF0F5;;;;
;;DarkSlateBlue;#483D8B;;;;;;;DarkOliveGreen;#556B2F;;;;;Brown;#A52A2A;MistyRose;#FFE4E1;;;;
;;RebeccaPurple;#663399;;;;;;;MediumAquaMarine;#66CDAA;;;;;Maroon;#800000;;;;;;
;;Indigo;#4B0082;;;;;;;DarkSeaGreen;#8FBC8F;;;;;;;;;;;;
;;;;;;;;;;LightSeaGreen;#20B2AA;;;;;;;;;;;;
;;;;;;;;;;DarkCyan;#008B8B;;;;;;;;;;;;
;;;;;;;;;;Teal;#008080;;;;;;;;;;;;
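The table is stored in wide format: the first row repeats each reduced color name over a ColorName/HEX column pair, the second row labels those columns, and shorter groups are padded with empty cells. The sketch below reads that layout into a dictionary of name lists with the standard `csv` module. `get_color_table` in `ammico.utils` is not shown in this diff, so `parse_color_table` is an assumed, hypothetical equivalent rather than its actual code; group names are lowercased because the first header cell is "Pink".

```python
import csv
import io
from typing import Dict, List


def parse_color_table(text: str) -> Dict[str, List[str]]:
    """Turn the wide, semicolon-separated color table into {group: [color names]}."""
    rows = list(csv.reader(io.StringIO(text), delimiter=";"))
    groups = [name.lower() for name in rows[0][::2]]  # each group spans 2 columns
    table: Dict[str, List[str]] = {group: [] for group in groups}
    for row in rows[2:]:  # skip the group row and the ColorName/HEX row
        for i, group in enumerate(groups):
            name = row[2 * i] if 2 * i < len(row) else ""
            if name:  # empty cells pad shorter groups
                table[group].append(name)
    return table


sample = (
    "Pink;Pink;grey;grey\n"
    "ColorName;HEX;ColorName;HEX\n"
    "Pink;#FFC0CB;Gainsboro;#DCDCDC\n"
    ";;Gray;#808080\n"
)
print(parse_color_table(sample))
# {'pink': ['Pink'], 'grey': ['Gainsboro', 'Gray']}
```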

482

ammico/display.py

Regular file

@ -0,0 +1,482 @@

import ammico.faces as faces
import ammico.text as text
import ammico.colors as colors
import pandas as pd
from dash import html, Input, Output, dcc, State, Dash
from PIL import Image
import dash_bootstrap_components as dbc


COLOR_SCHEMES = [
    "CIE 1976",
    "CIE 1994",
    "CIE 2000",
    "CMC",
    "ITP",
    "CAM02-LCD",
    "CAM02-SCD",
    "CAM02-UCS",
    "CAM16-LCD",
    "CAM16-SCD",
    "CAM16-UCS",
    "DIN99",
]
SUMMARY_ANALYSIS_TYPE = ["summary_and_questions", "summary", "questions"]
SUMMARY_MODEL = ["base", "large"]


class AnalysisExplorer:
    def __init__(self, mydict: dict) -> None:
        """Initialize the AnalysisExplorer class to create an interactive
        visualization of the analysis results.

        Args:
            mydict (dict): A nested dictionary containing image data for all images.

        """
        self.app = Dash(__name__, external_stylesheets=[dbc.themes.BOOTSTRAP])
        self.mydict = mydict
        self.theme = {
            "scheme": "monokai",
            "author": "wimer hazenberg (http://www.monokai.nl)",
            "base00": "#272822",
            "base01": "#383830",
            "base02": "#49483e",
            "base03": "#75715e",
            "base04": "#a59f85",
            "base05": "#f8f8f2",
            "base06": "#f5f4f1",
            "base07": "#f9f8f5",
            "base08": "#f92672",
            "base09": "#fd971f",
            "base0A": "#f4bf75",
            "base0B": "#a6e22e",
            "base0C": "#a1efe4",
            "base0D": "#66d9ef",
            "base0E": "#ae81ff",
            "base0F": "#cc6633",
        }

        # Setup the layout
        app_layout = html.Div(
            [
                # Top row, only file explorer
                dbc.Row(
                    [dbc.Col(self._top_file_explorer(mydict))],
                    id="Div_top",
                    style={
                        "width": "30%",
                    },
                ),
                # second row, middle picture and right output
                dbc.Row(
                    [
                        # first column: picture
                        dbc.Col(self._middle_picture_frame()),
                        dbc.Col(self._right_output_json()),
                    ]
                ),
            ],
            # style={"width": "95%", "display": "inline-block"},
        )
        self.app.layout = app_layout

        # Add callbacks to the app
        self.app.callback(
            Output("img_middle_picture_id", "src"),
            Input("left_select_id", "value"),
            prevent_initial_call=True,
        )(self.update_picture)

        self.app.callback(
            Output("right_json_viewer", "children"),
            Input("button_run", "n_clicks"),
            State("left_select_id", "options"),
            State("left_select_id", "value"),
            State("Dropdown_select_Detector", "value"),
            State("setting_Text_analyse_text", "value"),
            State("setting_privacy_env_var", "value"),
            State("setting_Emotion_emotion_threshold", "value"),
            State("setting_Emotion_race_threshold", "value"),
            State("setting_Emotion_gender_threshold", "value"),
            State("setting_Emotion_env_var", "value"),
            State("setting_Color_delta_e_method", "value"),
            prevent_initial_call=True,
        )(self._right_output_analysis)

        self.app.callback(
            Output("settings_TextDetector", "style"),
            Output("settings_EmotionDetector", "style"),
            Output("settings_ColorDetector", "style"),
            Input("Dropdown_select_Detector", "value"),
        )(self._update_detector_setting)

    # I split the different sections into subfunctions for better clarity
    def _top_file_explorer(self, mydict: dict) -> html.Div:
        """Initialize the file explorer dropdown for selecting the file to be analyzed.

        Args:
            mydict (dict): A dictionary containing image data.

        Returns:
            html.Div: The layout for the file explorer dropdown.
        """
        left_layout = html.Div(
            [
                dcc.Dropdown(
                    options={value["filename"]: key for key, value in mydict.items()},
                    id="left_select_id",
                )
            ]
        )
        return left_layout

    def _middle_picture_frame(self) -> html.Div:
        """Initialize the picture frame to display the image.

        Returns:
            html.Div: The layout for the picture frame.
        """
        middle_layout = html.Div(
            [
                html.Img(
                    id="img_middle_picture_id",
                    style={
                        "width": "80%",
                    },
                )
            ]
        )
        return middle_layout

    def _create_setting_layout(self):
        settings_layout = html.Div(
            [
                # text summary start
                html.Div(
                    id="settings_TextDetector",
                    style={"display": "none"},
                    children=[
                        dbc.Row(
                            dcc.Checklist(
                                ["Analyse text"],
                                ["Analyse text"],
                                id="setting_Text_analyse_text",
                                style={"margin-bottom": "10px"},
                            ),
                        ),
                        # row 1
                        dbc.Row(
                            dbc.Col(
                                [
                                    html.P(
                                        "Privacy disclosure acceptance environment variable"
                                    ),
                                    dcc.Input(
                                        type="text",
                                        value="PRIVACY_AMMICO",
                                        id="setting_privacy_env_var",
                                        style={"width": "100%"},
                                    ),
                                ],
                                align="start",
                            ),
                        ),
                    ],
                ),  # text summary end
                # start emotion detector
                html.Div(
                    id="settings_EmotionDetector",
                    style={"display": "none"},
                    children=[
                        dbc.Row(
                            [
                                dbc.Col(
                                    [
                                        html.P("Emotion threshold"),
                                        dcc.Input(
                                            value=50,
                                            type="number",
                                            max=100,
                                            min=0,
                                            id="setting_Emotion_emotion_threshold",
                                            style={"width": "100%"},
                                        ),
                                    ],
                                    align="start",
                                ),
                                dbc.Col(
                                    [
                                        html.P("Race threshold"),
                                        dcc.Input(
                                            type="number",
                                            value=50,
                                            max=100,
                                            min=0,
                                            id="setting_Emotion_race_threshold",
                                            style={"width": "100%"},
                                        ),
                                    ],
                                    align="start",
                                ),
                                dbc.Col(
                                    [
                                        html.P("Gender threshold"),
                                        dcc.Input(
                                            type="number",
                                            value=50,
                                            max=100,
                                            min=0,
                                            id="setting_Emotion_gender_threshold",
                                            style={"width": "100%"},
                                        ),
                                    ],
                                    align="start",
                                ),
                                dbc.Col(
                                    [
                                        html.P(
                                            "Disclosure acceptance environment variable"
                                        ),
                                        dcc.Input(
                                            type="text",
                                            value="DISCLOSURE_AMMICO",
                                            id="setting_Emotion_env_var",
                                            style={"width": "100%"},
                                        ),
                                    ],
                                    align="start",
                                ),
                            ],
                            style={"width": "100%"},
                        ),
                    ],
                ),  # end emotion detector
                html.Div(
                    id="settings_ColorDetector",
                    style={"display": "none"},
                    children=[
                        html.Div(
                            [
                                dcc.Dropdown(
                                    options=COLOR_SCHEMES,
                                    value="CIE 1976",
                                    id="setting_Color_delta_e_method",
                                )
                            ],
                            style={
                                "width": "49%",
                                "display": "inline-block",
                                "margin-top": "10px",
                            },
                        )
                    ],
                ),
            ],
            style={"width": "100%", "display": "inline-block"},
        )
        return settings_layout

    def _right_output_json(self) -> html.Div:
        """Initialize the DetectorDropdown, argument Div and JSON viewer for displaying the analysis output.

        Returns:
            html.Div: The layout for the JSON viewer.
        """
        right_layout = html.Div(
            [
                dbc.Col(
                    [
                        dbc.Row(
                            dcc.Dropdown(
                                options=[
                                    "TextDetector",
                                    "EmotionDetector",
                                    "ColorDetector",
                                ],
                                value="TextDetector",
                                id="Dropdown_select_Detector",
                                style={"width": "60%"},
                            ),
                            justify="start",
                        ),
                        dbc.Row(
                            children=[self._create_setting_layout()],
                            id="div_detector_args",
                            justify="start",
                        ),
                        dbc.Row(
                            html.Button(
                                "Run Detector",
                                id="button_run",
                                style={
                                    "margin-top": "15px",
                                    "margin-bottom": "15px",
                                    "margin-left": "11px",
                                    "width": "30%",
                                },
                            ),
                            justify="start",
                        ),
                        dbc.Row(
                            dcc.Loading(
                                id="loading-2",
                                children=[
                                    # This is where the json is shown.
                                    html.Div(id="right_json_viewer"),
                                ],
                                type="circle",
                            ),
                            justify="start",
                        ),
                    ],
                    align="start",
                )
            ]
        )
        return right_layout

    def run_server(self, port: int = 8050) -> None:
        """Run the Dash server to start the analysis explorer.

        Args:
            port (int, optional): The port number to run the server on (default: 8050).
        """

        self.app.run_server(debug=True, port=port)

    # Dash callbacks
    def update_picture(self, img_path: str):
        """Callback function to update the displayed image.

        Args:
            img_path (str): The path of the selected image.

        Returns:
            Union[PIL.Image.Image, None]: The image object to be displayed
                or None if the image path is None.

        """
        if img_path is not None:
            image = Image.open(img_path)
            return image
        else:
            return None

    def _update_detector_setting(self, setting_input):
        # return one style per Output: settings_TextDetector -> style,
        # settings_EmotionDetector -> style, settings_ColorDetector -> style
        display_none = {"display": "none"}
        display_flex = {
            "display": "flex",
            "flexWrap": "wrap",
            "width": 400,
            "margin-top": "20px",
        }

        if setting_input == "TextDetector":
            return display_flex, display_none, display_none

        if setting_input == "EmotionDetector":
            return display_none, display_flex, display_none

        if setting_input == "ColorDetector":
            return display_none, display_none, display_flex

        else:
            return display_none, display_none, display_none

    def _right_output_analysis(
        self,
        n_clicks,
        all_img_options: dict,
        current_img_value: str,
        detector_value: str,
        settings_text_analyse_text: list,
        setting_privacy_env_var: str,
        setting_emotion_emotion_threshold: int,
        setting_emotion_race_threshold: int,
        setting_emotion_gender_threshold: int,
        setting_emotion_env_var: str,
        setting_color_delta_e_method: str,
    ) -> dict:
        """Callback function to perform analysis on the selected image and return the output.

        Args:
            n_clicks (int): Number of clicks on the "Run Detector" button (triggers the callback).
            all_img_options (dict): The available options in the file explorer dropdown.
            current_img_value (str): The current selected value in the file explorer dropdown.
            detector_value (str): The name of the selected detector.
            The remaining arguments are the detector settings read from the settings panel.

        Returns:
            dbc.Table: A table with the analysis output for the selected image,
                or an empty dict if no image is selected.
        """
        identify_dict = {
            "EmotionDetector": faces.EmotionDetector,
            "TextDetector": text.TextDetector,
            "ColorDetector": colors.ColorDetector,
        }

        # Get image ID from dropdown value, which is the filepath
        if current_img_value is None:
            return {}
        image_id = all_img_options[current_img_value]
        # copy image so previous runs don't leave their default values in the dict
        image_copy = self.mydict[image_id].copy()

        # detector value is the string name of the chosen detector
        identify_function = identify_dict[detector_value]

        if detector_value == "TextDetector":
            analyse_text = (
                True if settings_text_analyse_text == ["Analyse text"] else False
            )
            detector_class = identify_function(
                image_copy,
                analyse_text=analyse_text,
                accept_privacy=(
                    setting_privacy_env_var
                    if setting_privacy_env_var
                    else "PRIVACY_AMMICO"
                ),
            )
        elif detector_value == "EmotionDetector":
            detector_class = identify_function(
                image_copy,
                emotion_threshold=setting_emotion_emotion_threshold,
                race_threshold=setting_emotion_race_threshold,
                gender_threshold=setting_emotion_gender_threshold,
                accept_disclosure=(
                    setting_emotion_env_var
                    if setting_emotion_env_var
                    else "DISCLOSURE_AMMICO"
                ),
            )
        elif detector_value == "ColorDetector":
            detector_class = identify_function(
                image_copy,
                delta_e_method=setting_color_delta_e_method,
            )
        else:
            detector_class = identify_function(image_copy)
        analysis_dict = detector_class.analyse_image()

        # Initialize an empty dictionary
        new_analysis_dict = {}

        # Iterate over the items in the original dictionary
        for k, v in analysis_dict.items():
            # Check if the value is a list
            if isinstance(v, list):
                # If it is, convert each item in the list to a string and join them with a comma
                new_value = ", ".join([str(f) for f in v])
            else:
                # If it's not a list, keep the value as it is
                new_value = v

            # Add the new key-value pair to the new dictionary
            new_analysis_dict[k] = new_value

        df = pd.DataFrame([new_analysis_dict]).set_index("filename").T
        df.index.rename("filename", inplace=True)
        return dbc.Table.from_dataframe(
            df, striped=True, bordered=True, hover=True, index=True
        )
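A Dash callback with several `Output`s has to return exactly one value per output, in declaration order. A small generalisation of `_update_detector_setting` makes that invariant explicit by deriving the styles from an ordered list of panels. `PANELS` and `detector_styles` are hypothetical names for this sketch; the style dictionaries mirror the ones used in the explorer.

```python
from typing import Dict, Tuple

# Panel order must match the order of the Output(...) declarations in the callback
PANELS = ["TextDetector", "EmotionDetector", "ColorDetector"]

DISPLAY_NONE = {"display": "none"}
DISPLAY_FLEX = {
    "display": "flex",
    "flexWrap": "wrap",
    "width": 400,
    "margin-top": "20px",
}


def detector_styles(selected: str) -> Tuple[Dict, ...]:
    """Return one style dict per settings panel: flex for the selected one, hidden otherwise."""
    return tuple(
        dict(DISPLAY_FLEX) if panel == selected else dict(DISPLAY_NONE)
        for panel in PANELS
    )


print(detector_styles("EmotionDetector"))
```

Adding a new detector panel then only requires appending its name to `PANELS` and a matching `Output`, so the tuple length cannot drift from the number of outputs.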

405

ammico/faces.py

Regular file

@ -0,0 +1,405 @@

import cv2
import numpy as np
import os
import shutil
import pathlib
from tensorflow.keras.models import load_model
from tensorflow.keras.applications.mobilenet_v2 import preprocess_input
from tensorflow.keras.preprocessing.image import img_to_array
import keras.backend as K
from deepface import DeepFace
from retinaface import RetinaFace
from ammico.utils import DownloadResource, AnalysisMethod


DEEPFACE_PATH = ".deepface"
# alternative solution to the memory leaks
# cfg = K.tf.compat.v1.ConfigProto()
# cfg.gpu_options.allow_growth = True
# K.set_session(K.tf.compat.v1.Session(config=cfg))


def deepface_symlink_processor(name):
    def _processor(fname, action, pooch):
        if not os.path.exists(name):
            # symlink does not work on windows
            # use copy if running on windows
            if os.name != "nt":
                os.symlink(fname, name)
            else:
                shutil.copy(fname, name)
        return fname

    return _processor